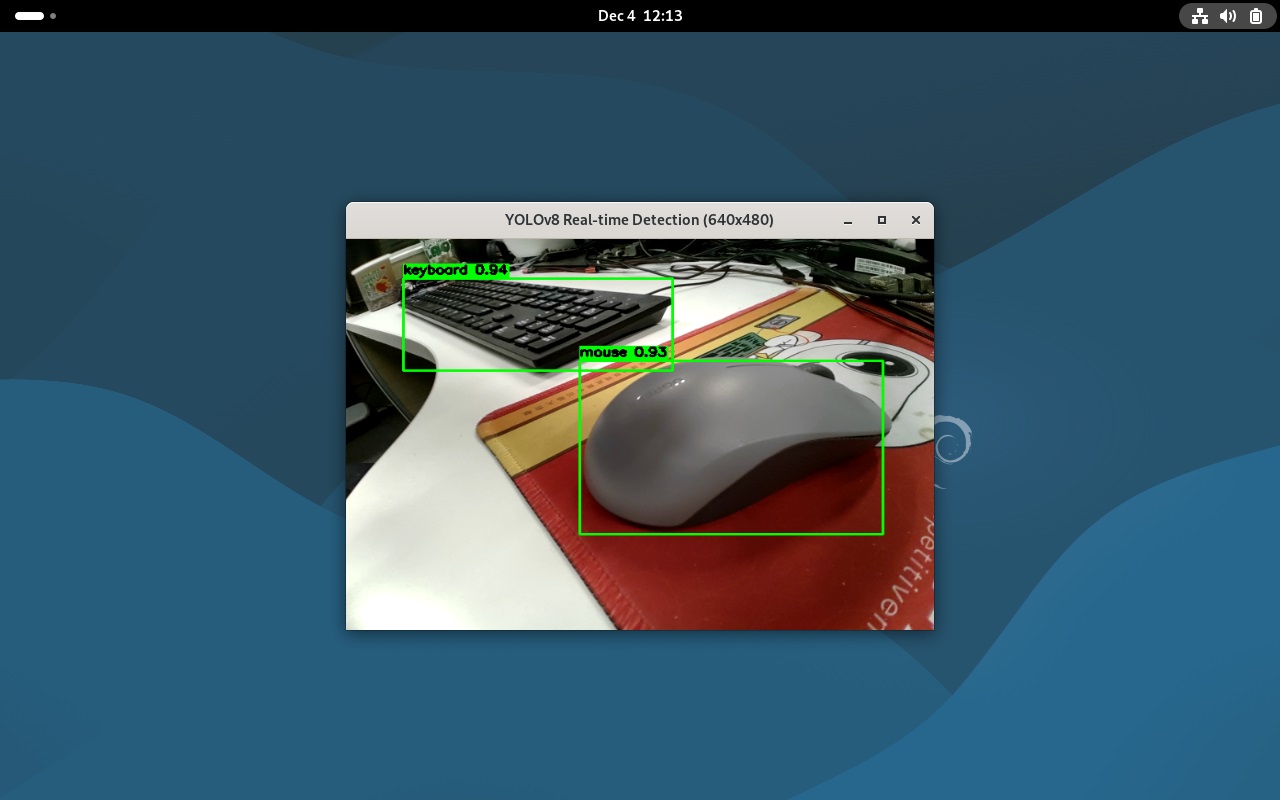

On-device deployment of YOLOv8 object detection

This document is based on the Qualcomm Quick AI Application Builder (QAI AppBuilder) tool, the wxWidgets library, and the YOLOv8 model to develop an application that captures camera streams, recognizes content, and displays it on screen.

Environment preparation

Run the following commands:

sudo apt update -y

sudo apt install -y g++ make pkg-config wx3.2-headers libwxgtk3.2-dev

sudo apt install -y libopencv-core-dev libopencv-calib3d-dev libopencv-dnn-dev

sudo apt install -y libopencv-objdetect-dev libopencv-photo-dev libopencv-stitching-dev

sudo apt install -y libopencv-video-dev libgstreamer-plugins-base1.0-dev

Download the QAI AppBuilder tool and decompress it to your user directory.

# Click the link above to download the compressed file ai-engine-direct-helper.tar and place it in ~/ai-engine-direct-helper.tar directory

# Decompress

cd ~

tar -xvf ai-engine-direct-helper.tar

Edit ai-engine-direct-helper/src/LibAppBuilder.cpp and remove the empty main function (this source file will be used during subsequent compilation, but the main function here is not needed).

Download the QAIRT tool and decompress it to your user directory.

# Click the link above to download the compressed file v2.37.1.250807.zip and place it in ~/v2.37.1.250807.zip directory

# Decompress

cd ~

unzip v2.37.1.250807.zip

Write code

Create a file named main.cpp with the following content:

#include "LibAppBuilder.hpp"

#include <iostream>

#include <filesystem>

#include <string>

#include <vector>

#include <cstddef>

#include <opencv2/opencv.hpp>

#include <opencv2/core/utils/logger.hpp>

#include <algorithm>

#include <map>

#include <thread>

#include <wx/rawbmp.h>

#include <gst/video/video-info.h>

#include <wx/log.h>

#include <wx/wx.h>

#include <gst/gst.h>

#include <gst/app/gstappsink.h>

#include <wx/dcclient.h>

#include <wx/thread.h>

#include <chrono>

#include <atomic>

namespace fs = std::filesystem;

const std::string MODEL_NAME = "yolov8_det";

const int IMAGE_SIZE = 640;

unsigned long frameCounter = 0;

// Class mapping for YOLOv8

std::map<int, std::string> class_map = {

{0, "person"},

{1, "bicycle"},

{2, "car"},

{3, "motorcycle"},

{4, "airplane"},

{5, "bus"},

{6, "train"},

{7, "truck"},

{8, "boat"},

{9, "traffic light"},

{10, "fire hydrant"},

{11, "stop sign"},

{12, "parking meter"},

{13, "bench"},

{14, "bird"},

{15, "cat"},

{16, "dog"},

{17, "horse"},

{18, "sheep"},

{19, "cow"},

{20, "elephant"},

{21, "bear"},

{22, "zebra"},

{23, "giraffe"},

{24, "backpack"},

{25, "umbrella"},

{26, "handbag"},

{27, "tie"},

{28, "suitcase"},

{29, "frisbee"},

{30, "skis"},

{31, "snowboard"},

{32, "sports ball"},

{33, "kite"},

{34, "baseball bat"},

{35, "baseball glove"},

{36, "skateboard"},

{37, "surfboard"},

{38, "tennis racket"},

{39, "bottle"},

{40, "wine glass"},

{41, "cup"},

{42, "fork"},

{43, "knife"},

{44, "spoon"},

{45, "bowl"},

{46, "banana"},

{47, "apple"},

{48, "sandwich"},

{49, "orange"},

{50, "broccoli"},

{51, "carrot"},

{52, "hot dog"},

{53, "pizza"},

{54, "donut"},

{55, "cake"},

{56, "chair"},

{57, "couch"},

{58, "potted plant"},

{59, "bed"},

{60, "dining table"},

{61, "toilet"},

{62, "tv"},

{63, "laptop"},

{64, "mouse"},

{65, "remote"},

{66, "keyboard"},

{67, "cell phone"},

{68, "microwave"},

{69, "oven"},

{70, "toaster"},

{71, "sink"},

{72, "refrigerator"},

{73, "book"},

{74, "clock"},

{75, "vase"},

{76, "scissors"},

{77, "teddy bear"},

{78, "hair drier"},

{79, "toothbrush"}

};

struct Detection {

float x1, y1, x2, y2;

float score;

int class_id;

};

float calculateIoU(const Detection& box1, const Detection& box2) {

float inter_x1 = std::max(box1.x1, box2.x1);

float inter_y1 = std::max(box1.y1, box2.y1);

float inter_x2 = std::min(box1.x2, box2.x2);

float inter_y2 = std::min(box1.y2, box2.y2);

float inter_area = std::max(0.0f, inter_x2 - inter_x1) * std::max(0.0f, inter_y2 - inter_y1);

float box1_area = (box1.x2 - box1.x1) * (box1.y2 - box1.y1);

float box2_area = (box2.x2 - box2.x1) * (box2.y2 - box2.y1);

return inter_area / (box1_area + box2_area - inter_area);

}

std::vector<Detection> nonMaximumSuppression(const std::vector<Detection>& detections,

float score_threshold = 0.45,

float iou_threshold = 0.7) {

std::vector<Detection> filtered_detections;

std::vector<Detection> candidates;

// Filter by score threshold

for (const auto& det : detections) {

if (det.score >= score_threshold) {

candidates.push_back(det);

}

}

// Sort by score in descending order

std::sort(candidates.begin(), candidates.end(),

[](const Detection& a, const Detection& b) { return a.score > b.score; });

// NMS

while (!candidates.empty()) {

// Take the highest scoring detection

Detection best_det = candidates[0];

filtered_detections.push_back(best_det);

candidates.erase(candidates.begin());

// Remove overlapping detections

for (auto it = candidates.begin(); it != candidates.end();) {

if (calculateIoU(best_det, *it) > iou_threshold) {

it = candidates.erase(it);

} else {

++it;

}

}

}

return filtered_detections;

}

void drawBoxOnImage(cv::Mat& image, const Detection& det,

float scale_x = 1.0f, float scale_y = 1.0f) {

// Scale coordinates back to original image size

int x1 = static_cast<int>(det.x1 * scale_x);

int y1 = static_cast<int>(det.y1 * scale_y);

int x2 = static_cast<int>(det.x2 * scale_x);

int y2 = static_cast<int>(det.y2 * scale_y);

// Clamp coordinates to image boundaries

x1 = std::max(0, std::min(image.cols - 1, x1));

y1 = std::max(0, std::min(image.rows - 1, y1));

x2 = std::max(0, std::min(image.cols - 1, x2));

y2 = std::max(0, std::min(image.rows - 1, y2));

if (x1 >= x2 || y1 >= y2) return; // Skip invalid boxes

// Draw rectangle (BGR color: green)

cv::rectangle(image, cv::Point(x1, y1), cv::Point(x2, y2), cv::Scalar(0, 255, 0), 2);

// Create label text

std::string label = class_map[det.class_id] + " " + std::to_string(det.score).substr(0, 4);

// Draw label background

int baseline = 0;

cv::Size text_size = cv::getTextSize(label, cv::FONT_HERSHEY_SIMPLEX, 0.5, 2, &baseline);

cv::rectangle(image, cv::Point(x1, y1 - text_size.height - baseline),

cv::Point(x1 + text_size.width, y1), cv::Scalar(0, 255, 0), cv::FILLED);

// Draw label text

cv::putText(image, label, cv::Point(x1, y1 - baseline),

cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0), 2);

}

class YOLOv8Detector {

private:

LibAppBuilder libAppBuilder;

bool initialized = false;

public:

YOLOv8Detector() = default;

~YOLOv8Detector() {

if (initialized) {

libAppBuilder.ModelDestroy(MODEL_NAME);

}

}

bool Initialize() {

// Get current working directory

fs::path execution_ws = fs::current_path();

fs::path backend_lib_path = execution_ws / "libQnnHtp.so";

fs::path system_lib_path = execution_ws / "libQnnSystem.so";

fs::path model_path = execution_ws / (MODEL_NAME + ".bin");

// Disable OpenCV logging

cv::utils::logging::setLogLevel(cv::utils::logging::LOG_LEVEL_ERROR);

SetLogLevel(1);

SetProfilingLevel(1); // ProfilingLevel::BASIC

std::cout << "Initializing YOLOv8 model..." << std::endl;

int ret = libAppBuilder.ModelInitialize(MODEL_NAME, model_path.string(),

backend_lib_path.string(), system_lib_path.string());

if (ret < 0) {

std::cout << "Failed to initialize YOLOv8 model" << std::endl;

return false;

}

initialized = true;

std::cout << "YOLOv8 model initialized successfully" << std::endl;

return true;

}

std::vector<Detection> Detect(cv::Mat& image) {

if (!initialized) return {};

frameCounter++;

// Save original dimensions for scaling

int orig_height = image.rows;

int orig_width = image.cols;

float scale_x = static_cast<float>(orig_width) / IMAGE_SIZE;

float scale_y = static_cast<float>(orig_height) / IMAGE_SIZE;

// Resize image to model input size

cv::Mat resized_image;

cv::resize(image, resized_image, cv::Size(IMAGE_SIZE, IMAGE_SIZE),

0, 0, cv::INTER_LINEAR);

// Convert BGR to RGB and normalize to [0, 1]

cv::Mat rgb_image;

cv::cvtColor(resized_image, rgb_image, cv::COLOR_BGR2RGB);

cv::Mat float_image;

rgb_image.convertTo(float_image, CV_32FC3, 1.0 / 255.0);

// Prepare input tensor (NHWC format)

std::vector<float> input_data(IMAGE_SIZE * IMAGE_SIZE * 3);

memcpy(input_data.data(), float_image.data, input_data.size() * sizeof(float));

// Set performance profile

SetPerfProfileGlobal("burst");

// Prepare input/output buffers

std::vector<uint8_t*> inputBuffers;

std::vector<uint8_t*> outputBuffers;

std::vector<size_t> outputSize;

inputBuffers.push_back(reinterpret_cast<uint8_t*>(input_data.data()));

// Perform inference

std::string perfProfile = "burst";

int ret = libAppBuilder.ModelInference(MODEL_NAME, inputBuffers, outputBuffers,

outputSize, perfProfile);

// Release performance profile

RelPerfProfileGlobal();

if (ret < 0) {

std::cout << "Inference failed" << std::endl;

return {};

}

// Parse output tensors

if (outputBuffers.size() < 3) {

std::cout << "Unexpected number of output buffers: " << outputBuffers.size() << std::endl;

return {};

}

// Get output data

float* scores_data = reinterpret_cast<float*>(outputBuffers[0]);

float* class_ids_data = reinterpret_cast<float*>(outputBuffers[1]);

float* boxes_data = reinterpret_cast<float*>(outputBuffers[2]);

// Assuming output shape is [1, 8400, ...]

const int num_predictions = 8400;

// Collect all detections

std::vector<Detection> all_detections;

for (int i = 0; i < num_predictions; i++) {

Detection det;

det.score = scores_data[i];

det.class_id = static_cast<int>(class_ids_data[i]);

// Box coordinates: [x1, y1, x2, y2]

det.x1 = boxes_data[i * 4];

det.y1 = boxes_data[i * 4 + 1];

det.x2 = boxes_data[i * 4 + 2];

det.y2 = boxes_data[i * 4 + 3];

// Scale back to original image coordinates

det.x1 *= scale_x;

det.y1 *= scale_y;

det.x2 *= scale_x;

det.y2 *= scale_y;

all_detections.push_back(det);

}

// Apply NMS

std::vector<Detection> filtered_detections = nonMaximumSuppression(all_detections);

// Free output buffers

for (auto buffer : outputBuffers) {

free(buffer);

}

return filtered_detections;

}

};

class CameraFrame : public wxFrame

{

public:

CameraFrame(const wxString& title);

~CameraFrame();

private:

wxMutex m_imageMutex;

wxImage m_frameImage;

GstElement *m_pipeline = nullptr;

GstElement *m_appsink = nullptr;

YOLOv8Detector m_detector;

bool InitGstPipeline();

static GstFlowReturn NewSampleCallback(GstAppSink *sink, gpointer user_data);

void ConvertGstBufferToWxImage(GstBuffer *buffer, GstCaps *caps);

void OnPaint(wxPaintEvent& event);

void OnClose(wxCloseEvent& event);

DECLARE_EVENT_TABLE()

};

class CameraApp : public wxApp

{

public:

virtual bool OnInit();

};

IMPLEMENT_APP(CameraApp)

BEGIN_EVENT_TABLE(CameraFrame, wxFrame)

EVT_PAINT(CameraFrame::OnPaint)

EVT_CLOSE(CameraFrame::OnClose)

END_EVENT_TABLE()

bool CameraApp::OnInit()

{

gst_init(nullptr, nullptr);

CameraFrame *frame = new CameraFrame(wxT("YOLOv8 Real-time Detection (640x480)"));

frame->Show(true);

return true;

}

CameraFrame::CameraFrame(const wxString& title)

: wxFrame(nullptr, wxID_ANY, title, wxDefaultPosition, wxSize(640, 480))

{

// Initialize detector

if (!m_detector.Initialize()) {

wxLogError("Failed to initialize YOLOv8 detector");

Close();

return;

}

// Initialize pipeline and start

if (InitGstPipeline()) {

gst_element_set_state(m_pipeline, GST_STATE_PLAYING);

//wxLogMessage("GStreamer pipeline started successfully");

} else {

wxLogError("Failed to init GStreamer pipeline");

Close();

}

}

CameraFrame::~CameraFrame()

{

if (m_pipeline) {

gst_element_set_state(m_pipeline, GST_STATE_NULL);

gst_object_unref(m_pipeline);

}

gst_deinit();

}

static void OnBusError(GstBus* bus, GstMessage* msg, gpointer data)

{

GError* err = nullptr;

gchar* debug_info = nullptr;

gst_message_parse_error(msg, &err, &debug_info);

wxLogError("GStreamer error: %s", err ? err->message : "Unknown error");

g_error_free(err);

g_free(debug_info);

}

bool CameraFrame::InitGstPipeline()

{

const gchar *pipelineDesc = "qtiqmmfsrc name=camsrc ! "

"video/x-raw,format=NV12,width=640,height=480,framerate=30/1 ! "

"videoconvert ! "

"video/x-raw,format=BGR ! "

"appsink name=appsink sync=false max-buffers=1 drop=true";

// Create pipeline

m_pipeline = gst_parse_launch(pipelineDesc, nullptr);

if (!m_pipeline) return false;

GstBus* bus = gst_pipeline_get_bus(GST_PIPELINE(m_pipeline));

gst_bus_add_signal_watch(bus);

g_signal_connect(bus, "message::error", G_CALLBACK(OnBusError), this);

gst_object_unref(bus);

// Get appsink element

m_appsink = gst_bin_get_by_name(GST_BIN(m_pipeline), "appsink");

if (!m_appsink) return false;

// Configure appsink

gst_app_sink_set_emit_signals(GST_APP_SINK(m_appsink), TRUE);

gst_app_sink_set_drop(GST_APP_SINK(m_appsink), TRUE);

gst_app_sink_set_max_buffers(GST_APP_SINK(m_appsink), 1);

GstAppSinkCallbacks callbacks = {nullptr, nullptr, NewSampleCallback, nullptr, nullptr, nullptr};

gst_app_sink_set_callbacks(GST_APP_SINK(m_appsink), &callbacks, this, nullptr);

return true;

}

GstFlowReturn CameraFrame::NewSampleCallback(GstAppSink *sink, gpointer user_data)

{

CameraFrame *frame = static_cast<CameraFrame*>(user_data);

GstSample *sample = gst_app_sink_pull_sample(sink);

if (!sample) return GST_FLOW_ERROR;

GstBuffer *buffer = gst_sample_get_buffer(sample);

GstCaps *caps = gst_sample_get_caps(sample);

if (buffer && caps) {

frame->ConvertGstBufferToWxImage(buffer, caps);

frame->Refresh();

}

gst_sample_unref(sample);

return GST_FLOW_OK;

}

void CameraFrame::ConvertGstBufferToWxImage(GstBuffer *buffer, GstCaps *caps)

{

GstVideoInfo info;

if (!gst_video_info_from_caps(&info, caps)) {

wxLogWarning("Failed to get video info from caps");

return;

}

// Validate video dimensions

if (info.width <= 0 || info.height <= 0) {

wxLogWarning("Invalid video dimensions: %dx%d", info.width, info.height);

return;

}

GstMapInfo map;

if (!gst_buffer_map(buffer, &map, GST_MAP_READ)) {

wxLogWarning("Failed to map GST buffer");

return;

}

// Validate data size (RGB format should be width×height×3)

size_t expectedSize = info.width * info.height * 3;

if (map.size < expectedSize) {

wxLogWarning("Buffer size mismatch: expected %zu, got %zu", expectedSize, map.size);

gst_buffer_unmap(buffer, &map);

return;

}

// Convert GST buffer to cv::Mat for detection

cv::Mat frame(info.height, info.width, CV_8UC3, map.data);

// Perform detection

auto detections = m_detector.Detect(frame);

// Draw detections on frame

for (const auto& det : detections) {

drawBoxOnImage(frame, det);

}

// Convert BGR to RGB for wxImage

cv::cvtColor(frame, frame, cv::COLOR_BGR2RGB);

wxMutexLocker locker(m_imageMutex);

m_frameImage = wxImage(info.width, info.height, frame.data, true).Copy();

gst_buffer_unmap(buffer, &map);

}

void CameraFrame::OnPaint(wxPaintEvent& event)

{

wxPaintDC dc(this);

wxMutexLocker locker(m_imageMutex);

if (!m_frameImage.IsOk() || m_frameImage.GetWidth() <= 0 || m_frameImage.GetHeight() <= 0) {

// Draw default background

dc.SetBackground(wxBrush(wxColour(50, 50, 50)));

dc.Clear();

// Draw status text

dc.SetTextForeground(wxColour(255, 255, 255));

dc.DrawText(wxT("Waiting for camera feed..."), wxPoint(20, 20));

return;

}

// Draw image, scaled to window size

wxSize clientSize = GetClientSize();

if (clientSize.GetWidth() <= 0 || clientSize.GetHeight() <= 0)

return;

wxBitmap bmp(m_frameImage.Scale(clientSize.GetWidth(), clientSize.GetHeight(), wxIMAGE_QUALITY_BILINEAR));

dc.DrawBitmap(bmp, 0, 0, false);

}

void CameraFrame::OnClose(wxCloseEvent& event)

{

Destroy();

}

Create a Makefile

Create a Makefile with the following content:

SRCS += main.cpp

SRCS += $(wildcard ~/ai-engine-direct-helper/src/*.cpp)

SRCS += $(wildcard ~/ai-engine-direct-helper/src/Log/*.cpp)

SRCS += $(wildcard ~/ai-engine-direct-helper/src/PAL/src/linux/*.cpp)

SRCS += $(wildcard ~/ai-engine-direct-helper/src/Utils/*.cpp)

SRCS += $(wildcard ~/ai-engine-direct-helper/src/WrapperUtils/*.cpp)

SRCS += $(wildcard ~/ai-engine-direct-helper/src/PAL/src/common/*.cpp)

OBJS = $(patsubst %cpp, %o, $(SRCS))

DEPS = $(patsubst %cpp, %d, $(SRCS))

CXXFLAGS += -I/usr/include/opencv4

CXXFLAGS += -I/usr/include/wx-3.2

CXXFLAGS += -I ~/qairt/2.37.1.250807/include/QNN

CXXFLAGS += -I ~/ai-engine-direct-helper/src

CXXFLAGS += -I ~/ai-engine-direct-helper/src/Utils

CXXFLAGS += -I ~/ai-engine-direct-helper/src/WrapperUtils

CXXFLAGS += -I ~/ai-engine-direct-helper/src/Log

CXXFLAGS += -I ~/ai-engine-direct-helper/src/PAL/include

CXXFLAGS += -I/usr/include/torch/csrc/api/include

LDFLAGS += -lopencv_core -lopencv_highgui -lopencv_imgcodecs -lopencv_imgproc -lopencv_videoio

CXXFLAGS += `wx-config --cxxflags` `pkg-config --cflags gstreamer-1.0 gstreamer-app-1.0 gstreamer-video-1.0` -Wall -g

LDFLAGS += `wx-config --libs` `pkg-config --libs gstreamer-1.0 gstreamer-app-1.0 gstreamer-video-1.0`

TARGET = wx_yolo8

all: $(TARGET)

%.o: %.cpp

g++ -c -g -MMD $(CXXFLAGS) $< -o $@

$(TARGET): ${OBJS}

g++ -o $@ $^ ${LDFLAGS}

sinclude $(DEPS)

.PHONY: clean

clean:

$(RM) $(TARGET) ${OBJS} ${DEPS}

Compile program

Execute the command make to compile the program and obtain the executable file wx_yolo8.

Get model file

When running Qualcomm Model Demo for the YOLOv8-det example, the model file will be downloaded to yolov8_det/models/yolov8_det. You may also click here to download the model file.

Get runtime library files

Please obtain the following runtime library files from the decompressed v2.37.1.250807 directory.

libQnnHtp.so

libQnnHtpV68Skel.so

libQnnHtpV68Stub.so

libQnnSystem.so

Run program

Run the following commands:

FILE_PATH=$(sudo find /run/user/1001 -type f -name '.mutter-*' | head -n 1)

sudo XAUTHORITY="$FILE_PATH" \

LD_LIBRARY_PATH=lib:$LD_LIBRARY_PATH \

GST_PLUGIN_PATH=/usr/lib/gstreamer-1.0:$GST_PLUGIN_PATH \

DISPLAY=:0 ./wx_yolo8

Runtime Result

Quick deployment verification

The compiled program and all required runtime files have been packaged into yolov8-det-deploy.tar.gz, allowing for quick deployment and verification.

tar xvf yolov8-det-deploy.tar.gz

#Starting the camera requires root privileges; simply enter the sudo password as prompted.

cd yolo-deploy

./run.sh