NPU development guide

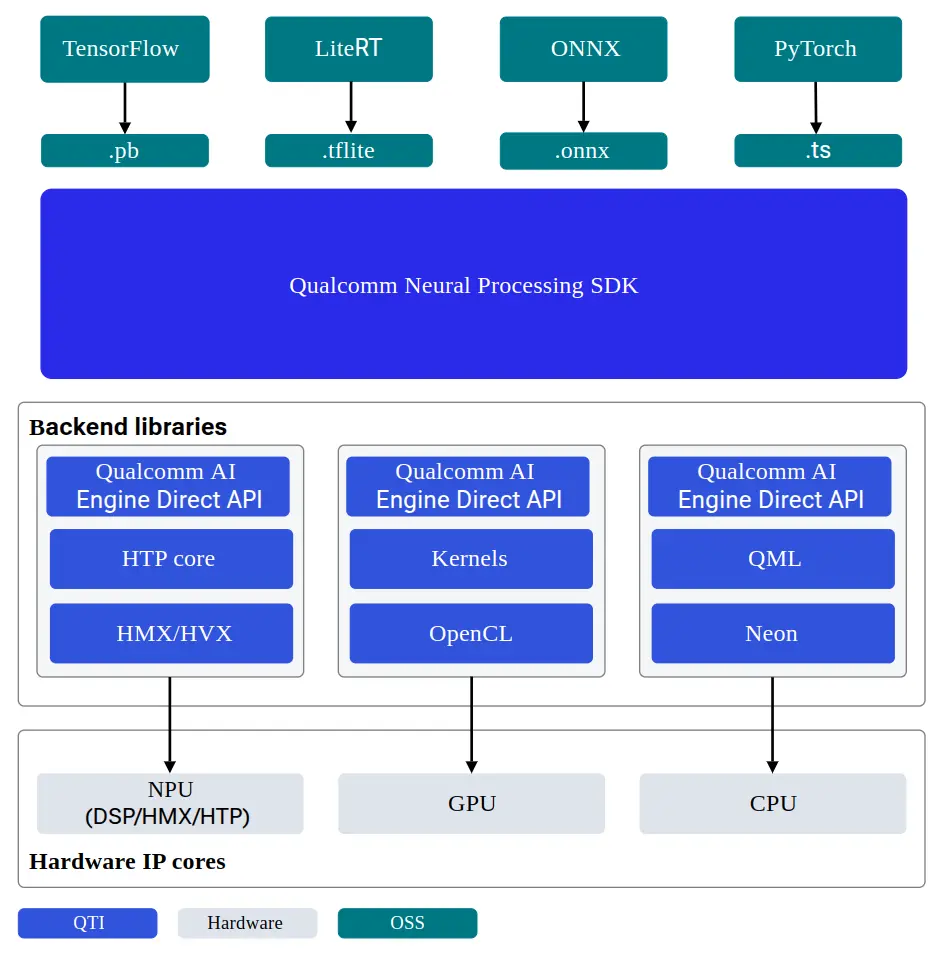

The SoC in the Quectel Pi H1 integrates a Qualcomm® Hexagon™ Processor (NPU), a hardware accelerator dedicated to inference. Running model inference on the NPU requires porting pre-trained models with QAIRT (Qualcomm® AI Runtime SDK). Qualcomm® provides a set of SDKs to help developers deploy their models on the NPU:

- Model quantization library: AIMET

- Model porting SDK: QAIRT

- Model application library: QAI AppBuilder

- Online model conversion library: QAI-Hub

Preparations

Create Python execution environment

sudo apt install python3-numpy

python3.10 -m venv venv

. venv/bin/activate

Download testing program

Download ai-test.zip, unzip it and enter the directory.

unzip ai-test.zip

cd ai-test

Execute AI inference

Execute the program to load the model and test dataset:

./qnn-net-run --backend ./libQnnHtp.so \
--retrieve_context resnet50_aimet_quantized_6490.bin \
--input_list test_list.txt --output_dir output_bin
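When the inference step needs to be driven from a Python script, the same command can be assembled and launched with `subprocess`. The sketch below is only an illustration: the helper names `build_net_run_cmd` and `run_inference` are hypothetical, and it assumes the tool, the backend library, and the model files sit in the current `ai-test` directory as in the command above. Only the flags already shown in this guide are used.

```python
import os
import subprocess


def build_net_run_cmd(tool_dir, context_bin, input_list, output_dir):
    """Assemble the qnn-net-run argument list shown in this guide.

    os.path.join keeps the leading "./" so the binary is looked up in
    tool_dir instead of on PATH.
    """
    return [
        os.path.join(tool_dir, "qnn-net-run"),
        "--backend", os.path.join(tool_dir, "libQnnHtp.so"),
        "--retrieve_context", context_bin,
        "--input_list", input_list,
        "--output_dir", output_dir,
    ]


def run_inference(cmd):
    """Run the command; raises CalledProcessError on a non-zero exit code."""
    return subprocess.run(cmd, check=True)


# Build the command for the files used in this guide and show it.
cmd = build_net_run_cmd(
    ".", "resnet50_aimet_quantized_6490.bin", "test_list.txt", "output_bin"
)
print(" ".join(cmd))
# On the device, call run_inference(cmd) to actually execute it.
```

Passing the arguments as a list avoids shell quoting problems, and `check=True` makes a failed run visible to the calling script.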

View results

Execute the script to view the results:

python3 show_resnet50_classifications.py \
--input_list test_list.txt -o output_bin/ \
--labels_file imagenet_classes.txt

Script output:

Classification results

./images/ILSVRC2012_val_00003441.raw [acoustic guitar]

./images/ILSVRC2012_val_00008465.raw [trifle]

./images/ILSVRC2012_val_00010218.raw [tabby]

./images/ILSVRC2012_val_00044076.raw [proboscis monkey]
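The contents of show_resnet50_classifications.py are not reproduced in this guide. As a rough sketch of how a top-1 label like the ones above can be derived, the example below assumes that each output file is a headerless array of little-endian float32 scores, one per class, and that the labels file holds one class name per line. Both the file layout and the helper names `read_float32_raw` and `top1_label` are assumptions made for illustration, and the demo uses synthetic scores rather than real model output.

```python
import os
import struct
import tempfile


def read_float32_raw(path):
    """Read a headerless file of little-endian float32 values into a list."""
    with open(path, "rb") as f:
        data = f.read()
    count = len(data) // 4  # 4 bytes per float32 value
    return list(struct.unpack(f"<{count}f", data[: count * 4]))


def top1_label(scores, labels):
    """Return the label whose score is highest."""
    if len(scores) != len(labels):
        raise ValueError(f"{len(scores)} scores but {len(labels)} labels")
    best = max(range(len(scores)), key=scores.__getitem__)
    return labels[best]


# Synthetic demo: three made-up scores, not real model output.
labels = ["tabby", "trifle", "acoustic guitar"]
with tempfile.NamedTemporaryFile(suffix=".raw", delete=False) as tmp:
    tmp.write(struct.pack("<3f", 0.1, 0.7, 0.2))
scores = read_float32_raw(tmp.name)
os.unlink(tmp.name)
print(top1_label(scores, labels))  # prints "trifle"
```

The length check is there because a mismatch between the number of scores and the number of labels would otherwise produce silently shifted class names.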

Test image collection

NPU software stack

QAIRT

The QAIRT (Qualcomm® AI Runtime) SDK is a software package that bundles Qualcomm® AI software products, including Qualcomm® AI Engine Direct, Qualcomm® Neural Processing SDK, and Qualcomm® Genie. QAIRT gives developers a complete set of tools for porting and deploying models on Qualcomm® hardware accelerators, together with the runtime for executing models on the CPU, GPU, and NPU.

Supported inference backends:

- CPU

- GPU

- NPU

SoC architecture comparison table

| SoC | dsp_arch | soc_id |
|---|---|---|
| QCS6490 | v68 | 35 |
| QCS9075 | v73 | 77 |
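Build scripts that target more than one board often need these two values by SoC name. A minimal lookup sketch is shown below; the names `SocInfo`, `SOC_TABLE`, and `lookup_soc` are hypothetical, and the data is copied from the table above. Where the values are consumed (for example in a tool's configuration) is outside the scope of this sketch.

```python
from typing import NamedTuple


class SocInfo(NamedTuple):
    dsp_arch: str  # Hexagon architecture version, e.g. "v68"
    soc_id: int    # numeric SoC identifier


# Values taken from the SoC architecture comparison table.
SOC_TABLE = {
    "QCS6490": SocInfo(dsp_arch="v68", soc_id=35),
    "QCS9075": SocInfo(dsp_arch="v73", soc_id=77),
}


def lookup_soc(name):
    """Return the SocInfo for an SoC name, ignoring case and whitespace."""
    key = name.strip().upper()
    if key not in SOC_TABLE:
        raise ValueError(f"unknown SoC {name!r}; known: {sorted(SOC_TABLE)}")
    return SOC_TABLE[key]


info = lookup_soc("qcs6490")
print(info.dsp_arch, info.soc_id)  # prints "v68 35"
```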

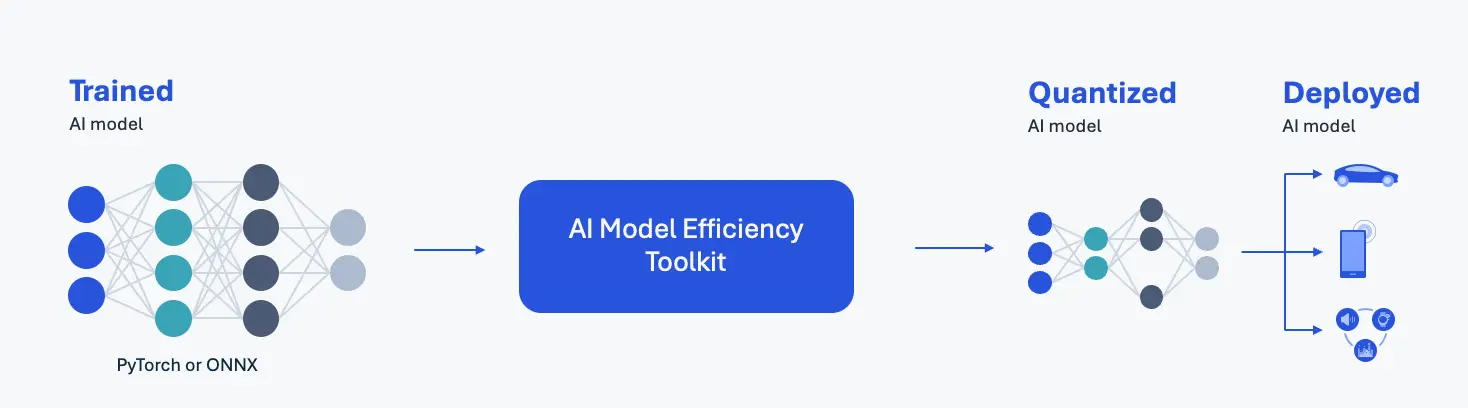

AIMET

AIMET (AI Model Efficiency Toolkit) is a quantization tool for deep learning models built with frameworks such as PyTorch and ONNX. AIMET improves model runtime performance by reducing computational load and memory footprint. With AIMET, developers can iterate quickly to find the quantization configuration that gives the best balance between accuracy and latency. Quantized models exported by AIMET can be compiled and deployed to the Qualcomm NPU via QAIRT, or executed directly using ONNX Runtime.
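To make the accuracy and footprint trade-off concrete, the sketch below shows generic asymmetric 8-bit quantization in plain Python. It is not AIMET's API and does not reflect how AIMET chooses its parameters; the function names are hypothetical and the weights are made-up values. Storing each value as an 8-bit integer instead of a 32-bit float cuts storage to a quarter, at the cost of a rounding error of about one quantization step at most.

```python
def quant_params(values, num_bits=8):
    """Derive scale and zero point for asymmetric unsigned quantization."""
    qmax = (1 << num_bits) - 1
    lo = min(min(values), 0.0)  # include 0.0 so it is exactly representable
    hi = max(max(values), 0.0)
    span = hi - lo
    scale = span / qmax if span > 0 else 1.0
    zero_point = round(-lo / scale)
    return scale, zero_point


def quantize(values, scale, zero_point, num_bits=8):
    """Map floats to integers in [0, 2**num_bits - 1], clipping at the ends."""
    qmax = (1 << num_bits) - 1
    return [min(qmax, max(0, round(v / scale) + zero_point)) for v in values]


def dequantize(qvalues, scale, zero_point):
    """Map quantized integers back to approximate float values."""
    return [(q - zero_point) * scale for q in qvalues]


# Made-up weights for illustration only.
weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
scale, zero_point = quant_params(weights)
q = quantize(weights, scale, zero_point)
restored = dequantize(q, scale, zero_point)
print("quantized:", q)
print("max abs error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Comparing `weights` with `restored` gives a feel for the error that a quantization tool has to keep small enough to preserve model accuracy.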

QAI AppBuilder

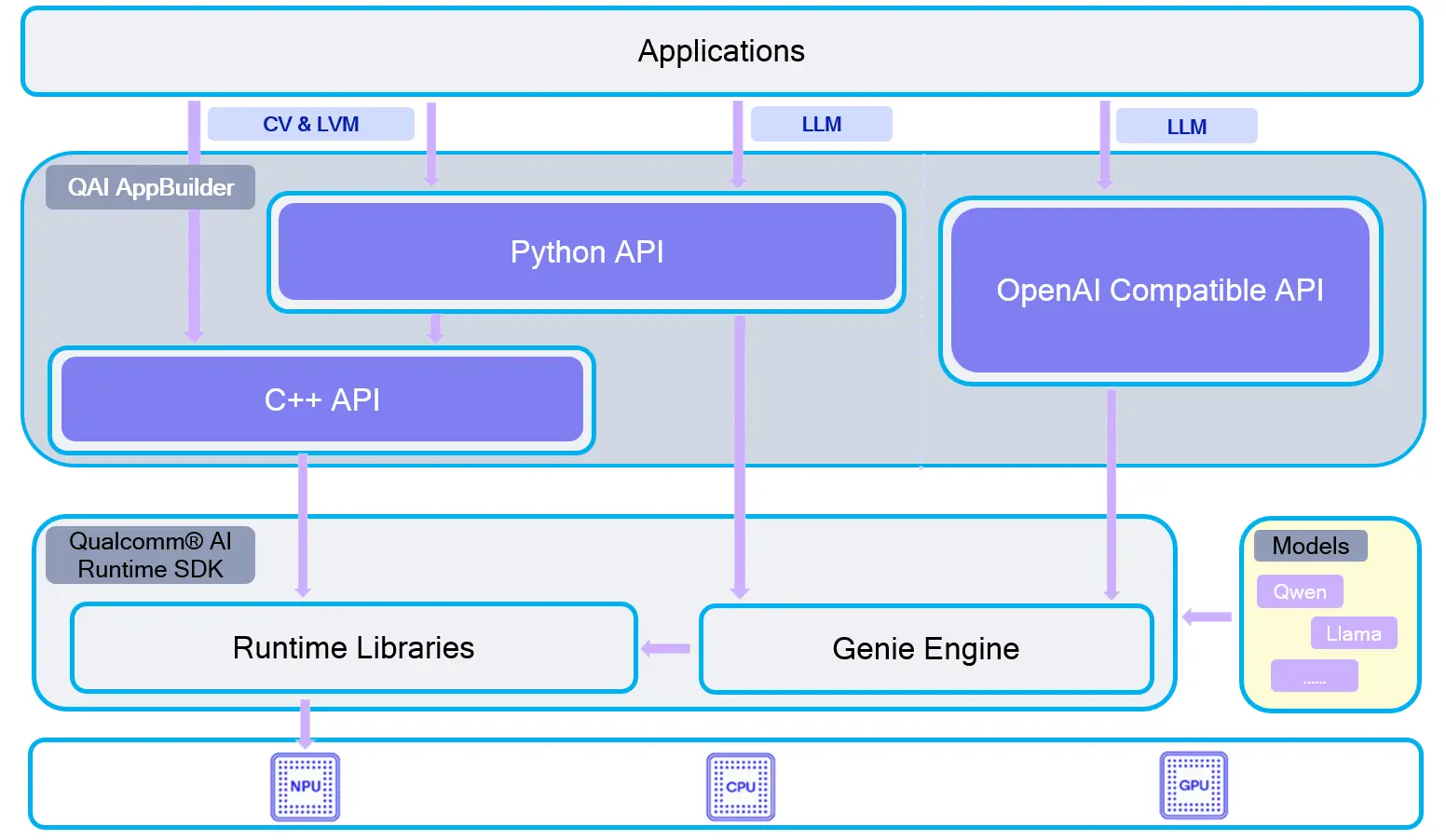

Quick AI Application Builder (QAI AppBuilder) helps developers deploy AI models and build AI applications on Qualcomm® SoC platforms equipped with the Qualcomm® Hexagon™ Processor (NPU). It is built on the Qualcomm® AI Runtime SDK and wraps the model deployment APIs in a simplified set of interfaces for loading models onto the NPU and running inference, which significantly reduces the complexity of model deployment. QAI AppBuilder also provides several reference demos to help developers quickly build their own NPU applications.

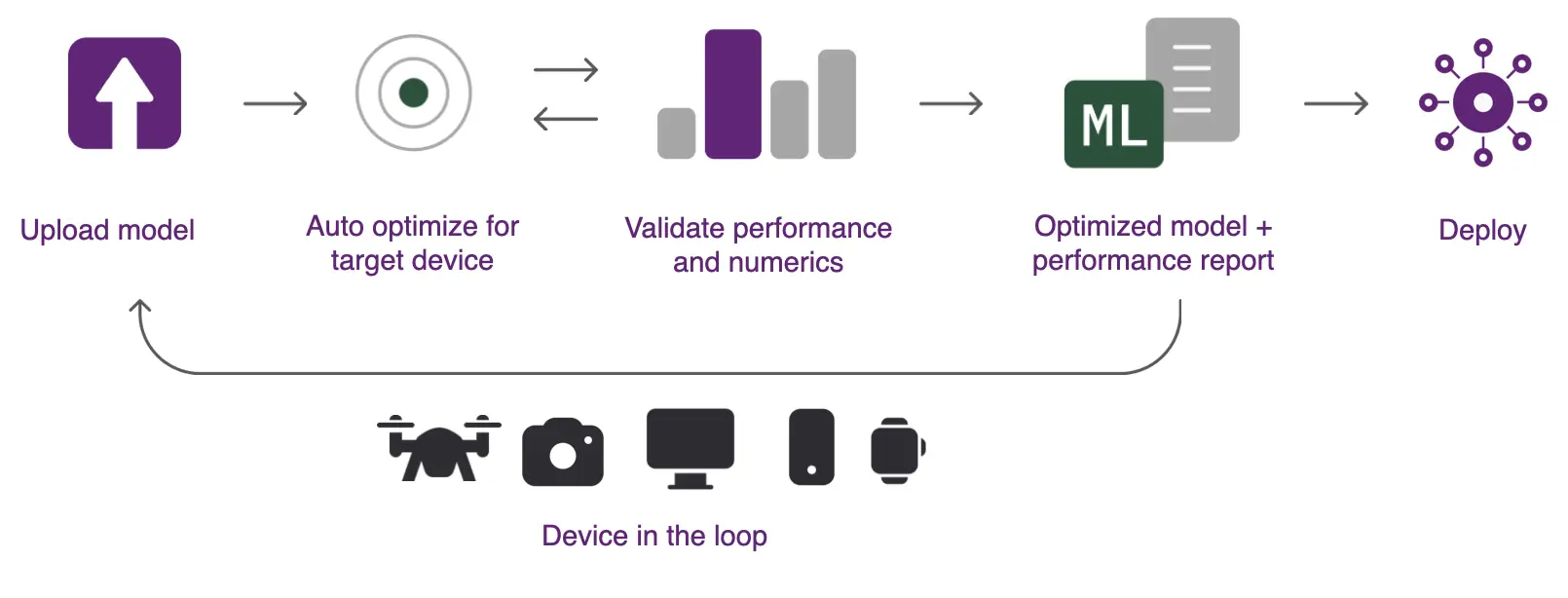

QAI-Hub

Qualcomm® AI Hub (QAI-Hub) is a one-stop online service platform for model conversion, offering online model compilation, quantization, performance analysis, inference, and download services. It automates the conversion from a pre-trained model to device runtimes. Qualcomm® AI Hub Models (QAI-Hub-Models) is a Python library built on QAI-Hub that lets users quantize, compile, run inference on, analyze, and download models through the AI Hub service.